Containers and jails allow you to make your system more secure, more reliable, more flexible and, at the end of the day, easier to manage. Once you get used to them, it becomes difficult to imagine setting up a server without such features.

But what are they exactly?

Containers and jails

Containers and jails designate different implementations of operating-system-level virtualization. Like a lot of the low-level security features we encounter today, this functionality can be traced back to the old mainframes, where reliability and parallelism are at the core of the system and where a host system could be partitioned into smaller isolated systems.

This feature then went through the commercial Unixes to finally reach open-source operating systems. The first open-source OS to really implement it was FreeBSD, which has offered its jail functionality since 2000 (FreeBSD 4.0). In the meantime there were several more or less successful attempts to implement equivalent functionality in Linux, but Linux users had to wait until 2008 (2014 for the first stable version) for a standardized and upstream-supported solution to become available as Linux Containers (LXC).

Linux containers and FreeBSD jails (I will use the term jail to designate both implementations in the rest of this article) are very flexible and allow you to isolate anything from a full-fledged, multi-user environment down to a single process.

But, as far as general server hardening is concerned, where they really shine (IMO) is in their ability to partition a server on a functional basis.

Practical example

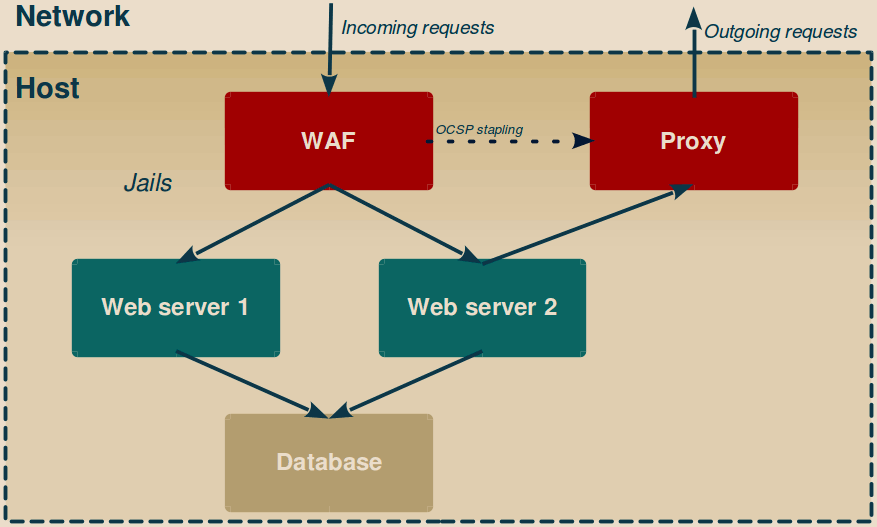

Let’s take the example in the figure. It looks like a classical web server platform, with the notable difference that the components, while isolated from each other, are all consolidated inside a single host.

All incoming and outgoing requests are handled by the WAF and the Proxy jails. The idea here is that anything which enters or leaves the host must go through centralized checkpoints, both to enforce a policy and to detect suspicious activity.

Actual services run deeper in the server. They have no direct access to the external network, and cannot be directly reached from the outside world either.

This picture is very simplified, as normally you will have several other jails gravitating around these main ones. I strongly recommend at least one syslog jail to store the logs out of the other jails’ reach. Likewise, while DNS resolution is technically doable from within the Proxy jail, functional separation should encourage you to dedicate another jail to it, and so on.

Keep in mind two advantages of jails compared to other virtualization solutions:

- There is little to no overhead. Running a service directly on the host or running it in a jail consumes the same amount of resources. Similarly, running two services in the same jail or in two different jails doesn’t change anything resource-wise.

- A jail’s file system is available for inspection from the host, with no possibility for any process in the jail to fake or hide anything, even with root privileges. This allows very effective Tripwire-like HIDS checks: the integrity database is stored out of the jails’ reach, while the tool still has direct access to the jails’ file systems.
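To make the idea more concrete, here is a minimal Python sketch of such a check as it could run on the host. It is only an illustration under my own assumptions: the `snapshot` and `compare` names are made up for the example, only file contents are hashed (no permissions, owners or timestamps), and this is not how Tripwire or any other HIDS is actually implemented.

```python
import hashlib
import os
import tempfile


def snapshot(jail_root):
    """Map each regular file under jail_root to its SHA-256 digest."""
    digests = {}
    # os.walk does not descend into symlinked directories by default, so a
    # link planted inside the jail cannot pull the scan into the host tree.
    for dirpath, _dirnames, filenames in os.walk(jail_root):
        for name in filenames:
            full = os.path.join(dirpath, name)
            # Symlinks and special files are simply skipped in this sketch.
            if os.path.islink(full) or not os.path.isfile(full):
                continue
            with open(full, "rb") as handle:
                digest = hashlib.sha256(handle.read()).hexdigest()
            # File names are only used as dictionary keys, never passed to a shell.
            digests[os.path.relpath(full, jail_root)] = digest
    return digests


def compare(baseline, current):
    """Return sorted lists of added, removed and modified relative paths."""
    added = sorted(set(current) - set(baseline))
    removed = sorted(set(baseline) - set(current))
    modified = sorted(
        path for path in baseline
        if path in current and baseline[path] != current[path]
    )
    return added, removed, modified


if __name__ == "__main__":
    # A temporary directory stands in for a jail root in this demonstration.
    with tempfile.TemporaryDirectory() as jail_root:
        target = os.path.join(jail_root, "index.php")
        with open(target, "w") as handle:
            handle.write("<?php echo 'hello'; ?>\n")
        baseline = snapshot(jail_root)  # taken while the jail is known-good
        with open(target, "a") as handle:
            handle.write("<?php system($_GET['c']); ?>\n")
        print(compare(baseline, snapshot(jail_root)))
```

In a real setup the baseline would be written somewhere the jails cannot reach, which is exactly what running the check from the host (or from a dedicated jail) makes possible.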

Warning

Manipulating jail files outside of the jail context is always a risky operation. For instance, due to a vulnerability in a new feature of OSSEC, an attacker able to give a specially crafted name to a file was able to make OSSEC execute arbitrary shell commands as the root user.

Depending on the enabled features, such a HIDS tool doesn’t necessarily need to be executed in the host context. As far as file integrity checking is concerned, it is possible to run the inspection tool in one (or several) dedicated jail(s) where the other jails’ directories are accessed through read-only null mounts.

The WAF jail

The WAF (Web Application Firewall) here usually also does SSL termination (as you always use HTTPS whenever possible, don’t you?), which keeps the cryptographic material out of reach of the web server should it be compromised.

Depending on your exact setup, the WAF may also need access to the Internet to do OCSP stapling. In this case, don’t grant it direct Internet access but instead force it to go through the Proxy jail. Always keep things under tight control.

As the main entry point, the WAF also routes validated incoming requests to the correct web server.

Warning

It may be tempting to use the WAF to cache answers. Even micro-caching (caching an answer for a few seconds) would greatly reduce the web server load without any noticeable difference in how quickly content updates show up.

However, it is very easy to screw things up at this step, and it regularly happens that private or sensitive information (like valid session identifiers) gets cached and then leaks, notably into search engine results, making it available to attackers.

Double-check your HTTP headers and cache behavior!
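As an illustration of the kind of check I have in mind, here is a deliberately conservative Python sketch. The `is_safe_to_cache` function is hypothetical and not part of any WAF or proxy; it only looks at a handful of standard HTTP headers (`Authorization`, `Cookie`, `Set-Cookie`, `Cache-Control`) and refuses to cache as soon as one of them hints at per-user content.

```python
def is_safe_to_cache(request_headers, response_headers):
    """Return True only if nothing hints at per-user or sensitive content."""
    # HTTP header names are case-insensitive, so normalize them first.
    req = {key.lower(): value for key, value in request_headers.items()}
    resp = {key.lower(): value for key, value in response_headers.items()}

    # A request carrying credentials or a cookie may get a per-user answer.
    if "authorization" in req or "cookie" in req:
        return False

    # A response setting a cookie may contain a fresh session identifier.
    if "set-cookie" in resp:
        return False

    # Honour the explicit wishes of the web server.
    directives = {
        item.strip().lower().split("=")[0]
        for item in resp.get("cache-control", "").split(",")
    }
    if directives & {"private", "no-store", "no-cache"}:
        return False

    return True
```

This errs on the side of not caching: a page that merely receives a cookie from the browser is never cached, which costs some efficiency but avoids serving one visitor's answer to another.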

The Web server jails

This example shows two web servers: one (Web server 2) needs access to some external resources while the other (Web server 1) does not.

Web servers have no direct access to the network and are not directly reachable from the network. This means that if an attacker manages to execute a payload on the web server (be it through PHP code injection or whatever):

- Opening a listening port will have no effect, as the port will never be reachable from the outside.

- Callback sessions will not work either, as outgoing connection requests initiated by the web server are not allowed. As such an event should not happen under normal circumstances, a HIDS can even be configured to raise a specific alert when it does.

- Several automated bot payloads rely on a `ping` or a `wget` equivalent to notify the C2 server of a vulnerable host. Here such a command will fail, keeping the C2 server from being notified even when the service is actually vulnerable (this can save your butt during the short time lapse between a vulnerability disclosure and patch application).

The Proxy jail

The Proxy enforces a whitelist-based policy. Unlike proxies commonly found on networks, this one should not merely block a few blacklisted addresses and operations, but instead ensure on a per-jail basis that each jail only attempts to access the resources it is expected to access (and optionally that the answer also matches the expected criteria).
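The logic of such a per-jail, default-deny policy can be sketched in a few lines of Python. Everything here is an assumption made for the illustration: the jail addresses, the host names and the `is_allowed` function are hypothetical, and a real deployment would express the same policy in the proxy's own configuration language rather than in Python.

```python
from urllib.parse import urlsplit

# Hypothetical policy: jail address -> set of (scheme, host) it may reach.
# A jail without an entry (such as "Web server 1") may reach nothing at all.
POLICY = {
    "127.0.1.10": {("http", "ocsp.example.net")},   # WAF, for OCSP stapling
    "127.0.1.12": {("https", "api.example.com")},   # Web server 2
}


def is_allowed(source_ip, url, policy=POLICY):
    """Default deny: allow only destinations listed for this source jail."""
    parts = urlsplit(url)
    # urlsplit lowercases the scheme; hostname is lowercased and port-free.
    destination = (parts.scheme, parts.hostname or "")
    return destination in policy.get(source_ip, set())
```

For example, `is_allowed("127.0.1.12", "https://api.example.com/v1/items")` is accepted, while the same URL requested from an address with no entry in `POLICY` is refused.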

Here you have to think about how to handle SSL connections:

- You may use end-to-end encryption from the inner jail up to the remote service, but in this case the proxy won’t have access to the details of the communication between the jail and the remote host.

- You may use SSL termination on the proxy. This allows you to enforce tighter rules to control the jails’ communication, but if the proxy jail is compromised an attacker will be able to intercept all communication going through it.

Personally I decide this on a case-by-case basis with the following rule of thumb:

- If a jail needs to access or transmit potentially sensitive data to a very narrow subset of trusted websites, then it is usually fine to allow encrypted communication with these websites.

- If a jail needs to exchange non-sensitive data with various or untrusted websites, then SSL termination on the proxy, combined with tighter checking and logging of the communication, is usually preferable.

Note

I’m talking about SSL termination, not SSL interception, as there is no point in encrypting the communication between the proxy and the inner jail.

While the Squid proxy supports SSL termination, not all client tools support delegating SSL to the proxy (personally I use a customized curl for this purpose; I will release the patch as soon as I have a clean version of it).

Warning

FreeBSD users, note that I’ve found a serious flaw affecting FreeBSD from version 7.0 up to and including 10.3 (although the latter is supported until April 2018, there is no fix planned for it): an attacker has direct access to SHM objects (as used by the popular Squid proxy) from any jail.

In the current example, an attacker would be able to DoS a Squid proxy (and potentially execute arbitrary code in the Proxy jail) from any jail, even from Web server 1, which should normally not be able to interact with the Proxy jail at all.

More details are available in my dedicated article.

The Database jail

Finally, the databases are stored in jails located even deeper in the host.

As it is not possible, even for the root user, to change or spoof a jail’s IP, checking the source IP on the database server side is a very effective way to ensure that each jail only accesses the databases it is supposed to access.

Nevertheless, if an attacker manages to execute arbitrary code in the database jail, they will still be able to access the other websites’ databases. As long as you keep your system updated, the probability of such an attack is quite low (don’t forget that such an attacker must already have taken control of a web server as a prerequisite). Still, nothing prevents you from using several database jails to mitigate or get rid of this risk.

Implementation

The example here shows a classical web server setup, but it can be extended to any kind of service and the recipe is always the same:

- Divide the service into functional blocks, describing the communication between the functional blocks.

- Design each jail as the implementation of a functional block.

- Set up the jails on a local interface and control jail communication using the host firewall.
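The first step of this recipe can be written down as a simple flow table before touching any jail or firewall configuration. The Python sketch below describes the web server example this way; the block names follow the example, but the port numbers and the helper functions are assumptions chosen for the illustration, not values taken from an actual setup.

```python
# Allowed flows between functional blocks: (source, destination, TCP port).
# Port numbers are illustrative assumptions.
ALLOWED_FLOWS = {
    ("internet", "waf", 443),       # incoming HTTPS, terminated on the WAF
    ("waf", "web1", 80),            # validated requests routed to the sites
    ("waf", "web2", 80),
    ("waf", "proxy", 3128),         # OCSP stapling goes through the proxy
    ("web2", "proxy", 3128),        # only Web server 2 needs external resources
    ("web1", "database", 5432),
    ("web2", "database", 5432),
    ("proxy", "internet", 443),     # the only way out of the host
}


def is_flow_allowed(source, destination, port, flows=ALLOWED_FLOWS):
    """Default deny: a flow is allowed only if it is explicitly listed."""
    return (source, destination, port) in flows


def blocks_touching(zone, flows=ALLOWED_FLOWS):
    """Return every block that exchanges traffic directly with the given zone."""
    senders = {src for src, dst, _port in flows if dst == zone}
    receivers = {dst for src, dst, _port in flows if src == zone}
    return senders | receivers
```

Such a table doubles as documentation and as a checklist for the firewall rules: here `blocks_touching("internet")` yields only the WAF and the Proxy, which is precisely the checkpoint property the design relies on.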

Divide the service into functional blocks

If done correctly, this is what will make your server really easier to administer, as jails turn into independent and potentially reusable modules.

If we take the example above:

- Inter-jail communication is documented from a purely functional point of view. Replacing one piece of software with another may become as easy as replacing one jail with another: the change neither depends on nor affects the other jails’ configuration.

- If we screw something up in Web server 1 or need to restart it for maintenance, this will not impact Web server 2’s activity.

Note

The modularity of containers opened the way to new software solutions that make it easy to develop, share and install software stacks. A classical example of this is the Docker system.

Bear in mind however that the target of these solutions is ease of use, not security.

Use them to accelerate the deployment of new applications if you like, but don’t use them as a “secure containment system”.

Design each jail

Thanks to the isolation between the host and guest systems, it becomes very easy to design and test the jail in a QA environment, try various solutions and settings, etc.

Set up the jails

Here are a few notes about setting up the jails:

- FreeBSD jails as well as Linux Containers are tied to an IP address and an interface.

  - Always use the loopback interface to strictly isolate the jails from the network (even for jails communicating with the outside such as the WAF and Proxy jails in the example above: it is the host firewall’s job to deliver incoming packets).

  - The 127.0.0.0/8 network range associated with the loopback interface provides more than 16 million addresses, so feel free to use this range for your jails’ IP addresses.

  - Use a netmask of /32 (255.255.255.255) to avoid any side effects.

- FreeBSD doesn’t have anything similar to Linux user namespaces. It is therefore recommended to assign system-wide unique UIDs to your jail users. Personally I use the jail IP as a prefix for the UID to keep things clear and easy to maintain, but this is my own concoction and you may find a different system that better suits your needs.

  Under the hood, LXC applies the same process by mapping each guest’s UIDs to a host UID range, but it makes this transparent to the guest system and also maps the guest’s root account to an unprivileged UID (a very good thing that is missing in FreeBSD jails).

- FreeBSD jails are quite secure by default, and offer a few `sysctl` options to tighten the security even further. This is not the same story with Linux LXC, where you will most likely need to create your own LXC profile to get something hopefully secure.

  Most default LXC profiles aim to be easy to deploy and use (see my note above about Docker) and do not attempt to block a potentially malicious actor who has taken control of a container. I have had good results, though, by creating Ubuntu jails (Ubuntu is the prime target of the LXC development team and the most stable profile for now) while relying on the gentoo.moresecure.conf profile to harden the default Ubuntu profile.

- As a BSD system, FreeBSD has a clear distinction between the base system and third-party applications. This makes it possible to mount the same base system as a read-only file system into each jail. This improves security, makes updating easier and saves disk space, as only third-party binaries and variable data need to be stored in a jail directory. The result is that a new jail only occupies around 5 MB.

  There is no such distinction in Linux, where everything is a package, from the system kernel up to the document writer: for now at least, each container must have its own complete Linux distribution to work. A minimal Ubuntu LXC container is around 300 MB, which makes Linux containers considerably heavier than their FreeBSD counterparts. Using an embedded distribution such as Alpine Linux as the guest should be a game changer in this regard (an Alpine Linux container is about 10 MB), but the last time I checked, Alpine Linux containers were still in early development (buggy scripts and missing files).

- As the jails have no direct Internet connection, traditional update commands will no longer work. There are three ways to handle software updates in your jails:

  - The quickest way is to bypass just enough of the jail isolation for the update process, making the update command very close to a standard update.

    In FreeBSD, you achieve this by issuing the `pkg` command from the host and using its `-c` parameter to tell it to chroot inside your jail. In Linux, you execute the update command from within the container using the `lxc-execute` command, but with a specific configuration file in which you do not enable the network namespace, thus sharing the host’s network namespace.

    While this should be safe to use on newly created jails, in the long run such commands may become unsafe as they create a weakness in the jail isolation (especially the FreeBSD solution).

  - Use a proxy jail allowing communication only with the update sources and, optionally, started only when you are actually applying updates.

    While possibly cleaner than the previous solution, I personally don’t like it as I prefer my isolated jails to be really isolated: with no network access at all.

  - Store your packages in a directory accessed by the jails through a read-only null mount point.

    In FreeBSD, such a directory is usually filled with customized packages generated using Poudriere. In Linux this depends on the distribution used: on Debian-based systems the `apt-get` command offers a `-d` (`--download-only`) option that downloads a package and all its dependencies at once (downloaded packages are stored by default under /var/cache/apt/archives).

    This is the safest and recommended solution.
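Coming back to the addressing and UID notes at the top of this list, the arithmetic behind them can be sketched in Python with the standard `ipaddress` module. The 127.0.1.0/24 jail subnet, the function names and the exact UID formula (last octet of the jail address times 1000, plus the UID the account would have in a stand-alone system) are assumptions made for the illustration; they show one possible way to use the jail IP as a UID prefix, not necessarily the exact scheme I use.

```python
import ipaddress

LOOPBACK = ipaddress.ip_network("127.0.0.0/8")
# Hypothetical choice: all jails of this host live in a single /24.
JAIL_NET = ipaddress.ip_network("127.0.1.0/24")


def jail_interface(jail_ip):
    """Return the jail address as a /32 interface on the loopback range."""
    address = ipaddress.ip_address(jail_ip)
    if address not in LOOPBACK:
        raise ValueError(f"{jail_ip} is not a loopback address")
    return ipaddress.ip_interface(f"{address}/32")


def jail_uid(jail_ip, local_uid):
    """Derive a host-wide unique UID from the jail address and a local UID."""
    address = ipaddress.ip_address(jail_ip)
    if address not in JAIL_NET:
        raise ValueError(f"{jail_ip} is outside {JAIL_NET}")
    last_octet = int(address) & 0xFF
    if last_octet == 0:
        raise ValueError("the network address would collide with host UIDs")
    if not 0 < local_uid < 1000:
        raise ValueError("local UID must be between 1 and 999")
    return last_octet * 1000 + local_uid


if __name__ == "__main__":
    print(LOOPBACK.num_addresses)                 # size of 127.0.0.0/8
    print(jail_interface("127.0.1.10").netmask)   # netmask of a /32
    print(jail_uid("127.0.1.10", 80))             # UID 80 inside jail .10
```

With this formula the same service account gets a different host UID in each jail (for instance 10080 in the jail at 127.0.1.10 and 11080 in the one at 127.0.1.11), so a file owner seen from the host immediately tells you which jail it belongs to.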

Maybe later I will write more step-by-step guides about FreeBSD jails and Linux Containers, but for now that’s all folks!